PointPillars explained

To train the network on a new dataset, specify new object classes and the corresponding anchor boxes. Volume 94, pages 659-674 (2022). For the next part, here's the link to Part 6. https://scale.com/open-datasets/pandaset. It shows that NN model optimization and quantization with the OpenVINO toolkit can significantly accelerate the processing pipeline. First, the point cloud is divided into a grid in the x-y plane, creating a set of pillars. The Jetson devices run in the Max-N configuration for maximum system performance. An example point cloud from a LiDAR sensor with a corresponding camera image is presented in Fig. The 3D points are captured by a Velodyne HDL-64E, which is a 64-channel LiDAR. Available: https://docs.openvinotoolkit.org/latest/pot_README.html, [11] Intel, "IE integration guide," [Online]. Detect and regress 3D bounding boxes using detection heads. Ma, Hua
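The grid step described above can be sketched in a few lines of Python. This is a minimal sketch, not the authors' code: the detection range x in [0, 69.12) m, y in [-39.68, 39.68) m and the 0.16 m pillar size are assumptions (a common KITTI car configuration), chosen because they yield a 496 × 432 grid, which matches the RPN input shape [1, 64, 496, 432] quoted later in this text. The function names are hypothetical.

```python
from collections import defaultdict


def assign_to_pillars(points, x_range=(0.0, 69.12), y_range=(-39.68, 39.68), pillar_size=0.16):
    """Group (x, y, z, r) points into pillars on a regular x-y grid.

    Returns a dict that maps a (row, col) grid cell to the points inside it.
    Points outside the detection range are discarded.
    """
    pillars = defaultdict(list)
    for x, y, z, r in points:
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue  # outside the area in front of the vehicle
        col = int((x - x_range[0]) // pillar_size)  # x position -> grid column
        row = int((y - y_range[0]) // pillar_size)  # y position -> grid row
        pillars[(row, col)].append((x, y, z, r))
    return dict(pillars)


def grid_shape(x_range=(0.0, 69.12), y_range=(-39.68, 39.68), pillar_size=0.16):
    """Number of (rows, cols) in the pillar grid, i.e. the pseudo-image H x W."""
    return (round((y_range[1] - y_range[0]) / pillar_size),
            round((x_range[1] - x_range[0]) / pillar_size))
```

Keying the dictionary by grid cell keeps only non-empty pillars, which mirrors the sparsity that PointPillars exploits.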

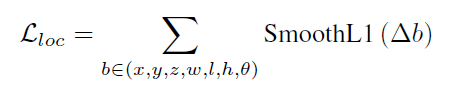

We show how all computations on pillars can be posed as dense 2D convolutions, which enables inference at 62 Hz, a factor of 2-4 times faster than other methods. The values in Figs. 8 and 9 are computed relative to the network implementation with 1, 32, 32, 64, 64 SIMD lanes and 32, 32, 64, 64, 128 PEs in consecutive layers. We focused on data from the KITTI database, especially car detection in the 3D category for three levels of difficulty: Easy, Moderate, and Hard. Our PointPillars version has a larger computational complexity, and Vitis AI applies a higher clock rate (325 MHz instead of 150 MHz). It includes: reading the input point cloud from an SD card, data preparation, the PFN part of the network, pre- and post-processing, and interpretation of the output maps. KITTI provides point clouds from the LiDAR sensor, images from four cameras (two monochrome and two colour), and information from the GPS/IMU navigation system. Hypothetically, if the FINN PointPillars version were run on the DPU, it would perform worse than FINN. The key performance indicator is the mean average precision (mAP) of object detection in 3D or bird's-eye view (BEV). The authors of the third article [1] present an FPGA-based deep learning application for real-time point cloud processing; they provide only resource utilisation and timing results, without any detection accuracy. In the following scripts, __getitem__() is the most important function; the POT calls it to process the input dataset. P is the number of pillars in the network and N is the number of points per pillar. Each point in the cloud, a 4-dimensional vector (x, y, z, reflectance), is converted to a 9-dimensional vector D = [x, y, z, r, Xc, Yc, Zc, Xp, Yp], where Xc, Yc, Zc are the distances from the arithmetic mean of the points in the pillar and Xp, Yp are the offsets from the pillar's x-y center. PointPillars runs at 62 fps, considerably faster than previous works in this area.
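The 9-dimensional decoration of each point can be illustrated with a short helper. This sketch assumes the pillar's points are already grouped into one list of (x, y, z, r) tuples and that the pillar's x-y center is known; `decorate_pillar` is a hypothetical name, not taken from any PointPillars codebase.

```python
def decorate_pillar(points, pillar_center_xy):
    """Extend each (x, y, z, r) point of one pillar to the 9-D vector
    D = [x, y, z, r, Xc, Yc, Zc, Xp, Yp]."""
    n = len(points)
    # Arithmetic mean of all points in the pillar.
    mean_x = sum(p[0] for p in points) / n
    mean_y = sum(p[1] for p in points) / n
    mean_z = sum(p[2] for p in points) / n
    cx, cy = pillar_center_xy
    decorated = []
    for x, y, z, r in points:
        decorated.append((x, y, z, r,
                          x - mean_x, y - mean_y, z - mean_z,  # Xc, Yc, Zc: offset from the mean
                          x - cx, y - cy))                     # Xp, Yp: offset from the pillar center
    return decorated
```

For two points (1, 2, 0, 0.5) and (3, 4, 2, 0.7) in a pillar centered at (2, 3), every offset of the first point is -1 and every offset of the second is +1.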
The FPGA part takes 262 milliseconds to finish, but the other operations on the PS part are expensive too. (pp. 65-74). The first layer of each block downsamples the feature map by half via a convolution with a stride of 2, followed by a sequence of stride-1 convolutions (q denotes the number of applications of the filter). We conducted more experiments with the PointPillars implementation in FINN using the newest version, 0.5. The generated HDL code is complicated and difficult to analyse. Besides the C++ API, the OpenVINO toolkit also provides a Python* API to call the IE. The disadvantages include improper operation in heavy rainfall, snowfall and fog (when laser beam scattering occurs), deterioration of the data quality with increasing distance from the sensor (sparsity of the point cloud), and a very high cost. Subsequently, for each cell, all of its points are processed by a max-pooling layer, creating a (C, P) output tensor. If each layer contained the maximum number of PEs with the maximum number of SIMD lanes, one output pixel could be computed in one clock cycle. As future work, we would like to analyse the newest network architectures and, with our knowledge of the FINN and Vitis AI frameworks, implement real-time object detection, possibly using a more accurate and recent algorithm than PointPillars. The first idea was to increase the network folding (described in [2]). BEV average precision drop of at most 8%. passes these features through six detection heads with convolutional and sigmoid layers to However, the network size was simultaneously reduced 55 times. In Sect. As future work, we want to continue exploring the trade-off between processing speed and accuracy. In [17] we used FINN version 0.3. ONNX provides an open-source format for AI models, both DL and traditional ML.
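The linear layer, ReLU and per-pillar max-pooling that turn a (C, P, N) tensor into a (C, P) tensor can be sketched in plain Python. Assumptions: batch normalisation is folded into `weights` and `bias`, the input points are already decorated, and `pillar_features` is a hypothetical helper; a real implementation would use a tensor library and padded, fixed-size inputs.

```python
def pillar_features(pillars, weights, bias):
    """Simplified PointNet step for a list of P pillars.

    pillars: P lists of decorated points (each a length-D sequence).
    weights: C rows of length D; bias: length C.
    Returns a C x P nested list holding one max-pooled feature per channel and pillar.
    """
    num_channels = len(weights)
    out = [[0.0] * len(pillars) for _ in range(num_channels)]
    for p_idx, points in enumerate(pillars):
        for c in range(num_channels):
            best = float("-inf")
            for point in points:
                # Linear layer followed by ReLU (batch norm assumed folded into weights/bias).
                activation = max(0.0, sum(w * v for w, v in zip(weights[c], point)) + bias[c])
                best = max(best, activation)  # max-pool over the N points of the pillar
            out[c][p_idx] = best if points else 0.0  # an empty pillar contributes zeros
    return out
```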
The AP (average precision) measure is used to compare the results: \(AP=\int _{0}^{1}p(r)dr\), where p(r) is the precision as a function of recall r. The detection results obtained using the PointPillars network in comparison with selected methods from the KITTI ranking are presented in Table 1. If you are interested in reading previous posts in this series, here's the link to Part 4. Pappalardo, A., & Team, X. R.L. Brevitas repository. We evaluated the latency of the pipeline optimized in Section 5.3 on an Intel Core i7-1165G7 processor; the results are summarized in Table 10. The dataset includes approximately 1.4 million images, 390 thousand LiDAR scans, 1.4 million radar scans and 1.4 million annotated objects. the number of cycles per layer) at the cost of a lower frame rate. LiDAR point cloud in BEV (bird's-eye view) with detected cars marked with bounding boxes [16]. The considerably larger number of features (128 for layers in the 2nd block and 256 for layers in the 3rd block) amplifies the effect. By taking advantage of multithreading (using the standard C++ threading library), the following components of the processor part of the network were parallelised: voxelisation, split into 4 threads, each handling a portion of the input points. Converts the point cloud into a sparse pseudo-image. The PointPillars network has these main stages. PointPillars: Fast Encoders for Object Detection from Point Clouds. OpenPCDet is used as the demo application. FINN allows the user to choose the folding, i.e. According to its original configuration in the source code, the network expects at most 12000 pillars as input. It also includes pruning support. Shi, S., Guo, C., Jiang, L., Wang, Z., Shi, J., Wang, X., & Li, H. (2019). One of the key applications is the use of long-range and high-precision datasets to implement 3D object perception, mapping, and localization algorithms.
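The integral \(AP=\int _{0}^{1}p(r)dr\) is evaluated numerically in practice. The sketch below uses the all-point interpolated precision (the maximum precision at any recall greater than or equal to r) and integrates it as a step function over recall. This is one common approximation, not necessarily the exact KITTI protocol, which samples a fixed set of recall positions; `average_precision` is a hypothetical name.

```python
def average_precision(recalls, precisions):
    """Approximate AP = integral of p(r) dr over [0, 1] from a precision-recall curve."""
    pairs = sorted(zip(recalls, precisions))
    rec = [r for r, _ in pairs]
    prec = [p for _, p in pairs]
    # Interpolated precision: make the curve monotonically non-increasing in recall.
    for i in range(len(prec) - 2, -1, -1):
        prec[i] = max(prec[i], prec[i + 1])
    # Sum rectangles of width (r_i - r_{i-1}) and height p_interp(r_i).
    ap, prev_r = 0.0, 0.0
    for r, p in zip(rec, prec):
        ap += (r - prev_r) * p
        prev_r = r
    return ap
```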
You can see a clever trick here to avoid using 3D convolution as in the SECOND detector (link). Sect. 112127). Blott, M., Preußer, T. B., Fraser, N. J., Gambardella, G., O'Brien, K., Umuroglu, Y., Leeser, M., & Vissers, K. (2018). The PointPillars network was used in the research, as it is a reasonable compromise between detection accuracy and computational complexity. FINN makes it possible to process quantised DCNNs trained with Brevitas and deploy them on a Zynq SoC or Zynq UltraScale+ MPSoC. Compared with the Dynamic Input Shape, the Static Input Shape can significantly reduce the NN model loading time, especially for the iGPU, as load_network() is needed only once, at initialization. Afterwards, each D-dimensional point is processed by a linear layer with batch normalisation and a ReLU activation function, resulting in a tensor with dimensions (C, P, N). 2019. https://github.com/nutonomy/second.pytorch. To refine and compress the PointPillars network with NNCF in the OpenVINO toolkit. Furthermore, the annotated objects are split into three levels of difficulty (Easy, Moderate, Hard) corresponding to different occlusion levels, truncation, and bounding box height. The camera image is presented here only for visualisation purposes; the bounding boxes plotted on it are based on 3D LiDAR data processed by the network and projected onto the image. The inference is run on the provided deployable models at FP16 precision. Total average inference time: 374.66 milliseconds (2.67 FPS). In PointPillars, only the NMS algorithm is implemented as a CUDA kernel. The network then concatenates output features at the end of each decoder block, and The principle is as follows: it can be seen that PointPillars (PP) is mainly composed of three major parts: 1. points: the points in a point cloud file. A detection head that detects and regresses 3D boxes. Vitis AI. 8: a comparison of pipeline and iterative neural network accelerators is performed with respect to inference speed.
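NMS, the only stage mentioned above as a CUDA kernel, is simple to express on the CPU. This greedy sketch is a simplification: it uses axis-aligned BEV boxes (x1, y1, x2, y2), whereas PointPillars predicts rotated boxes, and the 0.5 IoU threshold is an assumed default. Both function names are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two axis-aligned BEV boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes that overlap it too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep
```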
Compared to the other works we discuss in this area, PointPillars is one of the fastest inference models, with high accuracy on publicly available self-driving car datasets. PFE FP16, RPN INT8* - Refer to Section "Quantization to INT8"

The low power consumption of reprogrammable SoC devices is particularly attractive for the automotive industry, as the energy budget of new vehicles is rather limited. The Zynq processing system (PS) runs Linux, since it is responsible for the PFN module of PointPillars and some pre- and post-processing. These methods achieve only moderate accuracy on widely recognised test data sets. It supports multiple DNN frameworks, including PyTorch and TensorFlow. The code is available at https://github.com/vision-agh/pp-finn.

For more information on the config file, please refer to the TAO Toolkit User Guide. License to use these models is covered by the Model EULA. Use a feature encoder to convert a point cloud to a sparse pseudo-image. Folding can be expressed as \(\frac{ k_{size} \times C_{in} \times C_{out} \times H_{out} \times W_{out} }{ PE \times SIMD }\), where \(k_{size}\) is the kernel size, \(C_{in}\) and \(C_{out}\) are the numbers of input and output channels, \(H_{out}\) and \(W_{out}\) are the height and width of the output feature map, and PE and SIMD are the numbers of processing elements and SIMD lanes. It is recommended [19] to keep the same folding for each layer. The CUDA compiler is replaced by a C++ compiler. In object detection for autonomous vehicles, the most commonly used databases are KITTI, Waymo Open Dataset, and nuScenes. To use these models as pre-trained models for transfer learning, please use the snippet below as a template for the OPTIMIZATION component of the config file to train a PointPillars model. Sect. 2 gives a general overview of DCNN (Deep Convolutional Neural Network) based methods for object detection in LiDAR point clouds and briefly discusses the commonly used datasets. The annotation is used by the accuracy checker to verify whether the predicted result is the same as the annotation. In the case of LiDAR data, real-time processing can be defined as performing all computation tasks on the point cloud in a time equal to or lower than a single LiDAR scan period. We then upsample the output of every block to a fixed size and concatenate the results to construct the high-resolution feature map. Last access 17 June 2020. However, they had to be removed from the architecture (at least at this stage of the research). 3D: 79.99 for Easy, 69.07 for Moderate and 66.73 for Hard KITTI object detection difficulty level. The sensor cycle time t is 60 ms. The PointPillars models were trained on a proprietary LiDAR point cloud dataset. SE-SSD: Self-ensembling single-stage object detector from point cloud.
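The folding formula can be turned into a quick throughput estimate. Two assumptions here: `k_size` is read as the number of kernel elements (e.g. 9 for a 3×3 kernel), and, because FINN builds a pipelined dataflow accelerator, the slowest layer is taken to bound the frame rate (FIFO and I/O overheads are ignored). Both helper names are hypothetical.

```python
def layer_cycles(k_size, c_in, c_out, h_out, w_out, pe, simd):
    """Clock cycles one layer needs per frame: k_size*C_in*C_out*H_out*W_out / (PE*SIMD)."""
    return k_size * c_in * c_out * h_out * w_out / (pe * simd)


def estimated_fps(cycles_per_layer, clock_hz):
    """In a pipelined dataflow design, the slowest layer bounds the throughput."""
    return clock_hz / max(cycles_per_layer)
```

For a hypothetical 3×3 layer with 64 input and 64 output channels on a 248 × 216 output map, folded with 32 PEs and 32 SIMD lanes, `layer_cycles` gives 1,928,448 cycles per frame, so at 150 MHz that layer alone would cap the design at roughly 78 Hz. This also shows why equal folding across layers helps: no single layer becomes the bottleneck.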
It should be noted that if a PC with a high-performance GPU is used, at least a 500 W power supply is required. https://doi.org/10.1109/CVPR.2019.01298. In neural networks, computation mainly consists of multiply-add operations. Let's take the N-th frame as an example to explain the processing in Figure 14. In Sect. 4, its optimisation is presented. The issue is difficult to trace back, as FINN modules are synthesised from C++ code to HDL (Hardware Description Language) via Vivado HLS. For example, the shape of a point cloud frame before padding is the following: After padding, the shape of the point cloud frame becomes the following: Finally, we pass the input blob of static shape to infer() for every frame. We run the pipeline on the KITTI 3D object detection dataset, http://www.cvlibs.net/datasets/kitti/eval_object.php?obj_benchmark=3d. Xc, Yc, Zc = distance from the arithmetic mean of the pillar's points. (2017). Fig. 5 presents the camera image corresponding to the considered point cloud, with bounding boxes around the detected cars. 6, Fig. The input to the PointPillars [10] algorithm is a point cloud from a LiDAR sensor (in Cartesian coordinates) limited to the area located in front of the vehicle. There have been several Download the point cloud (29 GB), images (12 GB), calibration files (16 MB) and labels (5 MB), and format the datasets as follows: Thanks for the open source code mmcv, mmdet and mmdet3d. We would like to especially thank Tomasz Kryjak for his help and insight while conducting research and preparing this paper. an analysis of the PointPillars frame rate difference between the FINN and Vitis AI implementations. The authors based their convolutional layer implementation on the approach from [12].
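Padding each frame to a static shape, as described above, can be sketched as follows. The 12000-pillar limit comes from the original configuration mentioned in this text; the 100 points per pillar and the 9 features per point are assumptions, and `pad_to_static_shape` is a hypothetical name. A real pipeline would build a NumPy array or an input blob rather than nested lists.

```python
def pad_to_static_shape(pillars, max_pillars=12000, max_points=100, feature_dim=9):
    """Pad (or truncate) a variable-size pillar set to [max_pillars, max_points, feature_dim].

    Returns the padded nested list and the number of real (non-padding) pillars.
    """
    zero_point = [0.0] * feature_dim
    padded = []
    for pillar in pillars[:max_pillars]:              # drop pillars beyond the limit
        pts = [list(p) for p in pillar[:max_points]]  # drop points beyond the limit
        pts += [zero_point[:] for _ in range(max_points - len(pts))]
        padded.append(pts)
    num_real = len(padded)
    # Fill the remaining slots with all-zero pillars so every frame has the same shape.
    padded += [[zero_point[:] for _ in range(max_points)] for _ in range(max_pillars - num_real)]
    return padded, num_real
```

Because every frame now has the same shape, the network only needs to be loaded once.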
As MO does not support direct conversion from PyTorch* to the IR format, we need to convert the models from PyTorch* to the ONNX format as an intermediate step (as shown in Figure 5). This is pretty straightforward: the generated (C, P) tensor is transformed back to its original pillar location using the pillar index for each pillar. We reduce the number of points to around 20K per frame with the create_reduced_point_cloud() function, based on the SmallMunich [9] codebase. The only option left for a significant increase of implementation speed is further architecture reduction. Afterwards, we processed the quantised network in the FINN tool to obtain its hardware implementation. For the RPN model, the input shape is fixed as [1, 64, 496, 432], so we only need to call load_network() once, at initialization. Having analysed the implementation of PointPillars in FINN and in Vitis AI, we have, at this moment, found no other arguments for the frame rate difference. convolutional neural network (CNN) to produce network predictions, decodes the predictions, H and W are the dimensions of the pillar grid and simultaneously the dimensions of the pseudo-image. a comparison of the FINN and Vitis AI tools in terms of DNN inference speed in reprogrammable logic. PointPillars: Fast Encoders for Object Detection from Point Clouds. In this paper we present our FINN [21] is a tool based on Python and C++ (code synthesisable with Vivado HLS). ground removal), grouping (using clustering or fixed three-dimensional cells), handcrafted feature vector calculation and classification (e.g. Finally, the user gets a bitstream (programmable logic configuration) and a Python driver to run on a PYNQ-supported platform, the ZCU 104 in the considered case. It is less than the theoretical FINN frame rate (20.35 Hz). In this section, each step of the hardware implementation is presented. The network begins with a feature encoder, which is a simplified PointNet.
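The scatter step that maps the (C, P) tensor back onto the (C, H, W) pseudo-image can be sketched like this, assuming each pillar's (row, col) grid index was stored when the pillars were built; `scatter_to_pseudo_image` is a hypothetical name.

```python
def scatter_to_pseudo_image(features, indices, height, width):
    """Scatter a C x P pillar-feature tensor onto a C x H x W canvas.

    features: C lists of P values; indices: P (row, col) grid positions.
    Grid cells without a pillar stay zero, which makes the pseudo-image sparse.
    """
    channels = len(features)
    canvas = [[[0.0] * width for _ in range(height)] for _ in range(channels)]
    for p, (row, col) in enumerate(indices):
        for c in range(channels):
            canvas[c][row][col] = features[c][p]
    return canvas
```

The result can then be processed like a C-channel image of size H × W by ordinary 2D convolutions.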
The first layer in the block has a stride of \(\frac{S}{S_{in}}\), while the next ones have a stride equal to 1. Backbone: refer to the picture for the calculation. 3. Even so, the Vitis AI implementation runs faster than the Backbone with Detection Head alone in FINN (3.82 Hz). nuScenes: A multimodal dataset for autonomous driving. Du, Jessica
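The effect of the stride-2 first layer on the feature map size can be checked with the standard convolution output formula \(\lfloor (n + 2p - k)/s \rfloor + 1\). Assumptions: 3×3 kernels with padding 1, three blocks, and a 496 × 432 pseudo-image (the RPN input size quoted in this text); the helper names are hypothetical.

```python
def conv_out(size, kernel=3, stride=1, padding=1):
    """Output length of a convolution along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def backbone_shapes(height=496, width=432, num_blocks=3):
    """Feature map (H, W) after each backbone block."""
    shapes = []
    h, w = height, width
    for _ in range(num_blocks):
        # The first layer of the block uses stride 2 and halves the map.
        h, w = conv_out(h, stride=2), conv_out(w, stride=2)
        # The following stride-1 layers with padding 1 keep (h, w) unchanged.
        shapes.append((h, w))
    return shapes
```

With these assumptions the three blocks produce 248 × 216, 124 × 108 and 62 × 54 maps, which are then upsampled to a common size and concatenated.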

[2] Hesai and Scale. 7) we show the clock frequency vs frame rate and vs utilisation. Besides this, every layer has an input FIFO queue with a capacity of 256 pixels. In Sect.

We have tried to identify the root cause at the Python and C++ code level, but were not successful. 2. The PL part processing lasts 262 milliseconds. Uses features from well-studied networks like VGG. We have made several optimisations, such as rewriting the application from Python to C++. The sensors are specified with resolutions in range r = 0.15 m, azimuthal angle 2, and radial velocity Based on the analysis results, the PointPillars inference speed difference between the FINN-based and Vitis AI-based implementations is justified. In the following scripts, we choose the algorithm 'DefaultQuantization' (without accuracy checker) and the preset parameter 'performance' (symmetric quantization of weights and activations). Timing results do not include reading LiDAR data from an SD card. Extending the feature vector: split into 4 threads, each handling a portion of the voxels. The FPGA part takes 261.74 milliseconds. (2019). To check how the PointPillars network optimisation affects the detection precision (AP value) and the network size, we carried out several experiments, described in our previous paper [16]. Given the benchmark results and the fact that quantization of the PFE model is still in progress, we decided to use the PFE (FP16) and RPN (INT8) models in the processing pipeline for the PointPillars network. To sum up, in FINN there are three general ways to speed up the implementation of a given network architecture. Unlike the Static Input Shape, the Dynamic Input Shape requires calling load_network() on each frame, as the input blob's shape changes from frame to frame. CLB and LUT utilisation slightly increases. It is also shown that quantizing the RPN model to INT8 results in less than 1% accuracy loss. "Pointpillars: Fast encoders for object detection from point clouds." Therefore, the DPU should perform better.
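The 'performance' preset is described above as symmetric quantization of weights and activations. The sketch below shows what symmetric per-tensor INT8 quantization means numerically: the zero point is fixed at 0 and the scale is set by the largest absolute value. It illustrates the scheme only; it is not the POT's DefaultQuantization algorithm, which calibrates ranges on a dataset. Function names are hypothetical.

```python
def symmetric_quantize(values, num_bits=8):
    """Symmetric per-tensor quantization: q = round(x / scale), zero point fixed at 0."""
    qmax = 2 ** (num_bits - 1) - 1          # 127 for INT8
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Clamp to the symmetric range [-qmax, qmax].
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Map integer codes back to approximate real values."""
    return [qi * scale for qi in q]
```

The rounding error per value is at most half a quantization step (scale / 2), which is why accuracy usually degrades only slightly.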
Pillar Feature Net: the Pillar Feature Net first scans all the point clouds from the overhead view and builds the pillars per unit of the x-y grid. An intuitive rule can be drawn: if \(\forall k\in \{1,\ldots,L\}, a_k\gg b\), better results can be obtained using FINN; if \(\forall k\in \{1,\ldots,L\}, a_k\ll b\), the DPU should be faster. As Table 8 shows, there are three difficulty levels in this dataset. (pp. 12689-12697). As a result, our PointPillars version is reduced and simultaneously more computationally complex. At this stage, after training such a modified network for 20 epochs, it turned out that these changes did not cause a huge loss of detection accuracy, ca. Call load_network() to load the model to the GPU. After running the following POT scripts, we will have the RPN model in IR format (rpn.xml and rpn.bin) with INT8 precision. The first one is setting a higher clock frequency. For the PFE model, the input shape varies for each point cloud frame, as the number of pillars in each frame varies. Finally, with a tensor of size (C, H, W), we can treat it as an image of size H × W with C channels (now I see why the author uses the H and W notation). The frame rate equals 19 Hz and is measured taking into account both the components performed on the DPU and those computed in the PS.

In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). load_network() takes a long time (usually 3-4 s on the iGPU) for each frame, as it requires dynamic OpenCL compilation. Before calling infer(), we need to reshape the input parameters for each frame of the point cloud and call load_network() if the input shape has changed.
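The reshape-and-reload rule for dynamic input shapes amounts to caching the loaded network per input shape. In this sketch `load_fn` is a hypothetical stand-in for the reshape plus load_network() calls, not an OpenVINO function, and `ShapeAwareLoader` is a hypothetical name; with a static (padded) input shape, the loader would load only once.

```python
class ShapeAwareLoader:
    """Keep a loaded network and reload it only when the input shape changes."""

    def __init__(self, load_fn):
        self._load_fn = load_fn  # hypothetical: takes a shape, returns a loaded network
        self._shape = None
        self._network = None
        self.loads = 0           # how many (expensive) loads were performed

    def get(self, input_shape):
        shape = tuple(input_shape)
        if shape != self._shape:
            # Expensive path: corresponds to reshaping and calling load_network() again.
            self._network = self._load_fn(shape)
            self._shape = shape
            self.loads += 1
        return self._network
```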
https://docs.openvinotoolkit.org/2021.3/openvino_docs_install_guides_installing_openvino_linux.html, https://github.com/SmallMunich/nutonomy_pointpillars#onnx-ir-generate, http://www.cvlibs.net/datasets/kitti/eval_object.php?obj_benchmark=3d, https://docs.openvinotoolkit.org/latest/pot_README.html, https://docs.openvinotoolkit.org/latest/index.html, https://ark.intel.com/content/www/us/en/ark/products/208082/intel-core-i7-1185gre-processor-12m-cache-up-to-4-40-ghz.html, https://ark.intel.com/content/www/us/en/ark/products/208662/intel-core-i7-1165g7-processor-12m-cache-up-to-4-70-ghz.html, https://docs.openvinotoolkit.org/latest/openvino_docs_MO_DG_Deep_Learning_Model_Optimizer_DevGuide.html, https://github.com/SmallMunich/nutonomy_pointpillars, https://docs.openvinotoolkit.org/latest/openvino_docs_IE_DG_Integrate_with_customer_application_new_API.html

FP16: Half-Precision Floating-Point Format (16 bits). FP32: Single-Precision Floating-Point Format (32 bits). TFLOPS: Tera (Trillion) Floating-point Operations Per Second.

The inference latency for both PFE and RPN increases when they are run in parallel on the iGPU; the PFE inference has to wait for the completion of the PFE inference for the (N-1)-th frame, from T1 to T2; the post-processing has to wait for the completion of the scattering for the (N+1)-th frame, from T7 to T9. The main idea of the integration is to replace the forward() function of PyTorch* with the infer() function (Python* API) of the OpenVINO toolkit. At present, no operations can be moved to the PL, as almost all CLB resources are consumed. You can notice a similar idea of converting a LiDAR point cloud to a pseudo-image in PIXOR too. The final, reduced version of the PointPillars network has a 3D AP drop of 14% in Easy, 19% in Moderate, 15% in Hard and a BEV AP drop of 1% in Easy, 8% in Moderate, 7% in Hard relative to the original network version without quantisation. the KITTI ranking [9]).
IE offers a unified API across a number of supported Intel architecture processors. The sample code for quantization with the POT API is the following: The last issue is the main reason preventing this technology from being used more widely in commercially available vehicles (e.g., to improve ADAS solutions). https://github.com/nutonomy/second.pytorch. Extensive experimentation shows that PointPillars outperforms previous methods with respect to both speed and accuracy by a large margin [1]. Finally, the FINN framework usually implements the majority of the network in hardware, but it also keeps some unsynthesisable operations in the ONNX (Open Neural Network Exchange) graph, next to the FPGA implementation. Generally, two approaches can be distinguished: classical and based on deep neural networks. Chipnet: Real-time lidar processing for drivable region segmentation on an FPGA. Eigen v3. CUDA source code is removed from the compilation configuration file. This modification reduced the network to an extent that enabled it to fit onto the ZCU 104 platform. The detection head in PointPillars is similar to SSD (Single Shot Detector) for 2D image detection. An overview of the PointPillars network structure [10]. The inference time should be reduced 4x to reach real time, as a LiDAR sensor sends a new point cloud every 0.1 seconds. We use the KITTI 3D object detection dataset [12] to evaluate the accuracy of the NN models. PointNet [4]. In comparison with the Latency Mode, the main idea of the Throughput Mode is to maximize the parallelization of PFE and RPN inferences on the iGPU to achieve maximal throughput. The Vitis AI tool is based on the DPU accelerator.
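The real-time requirement can be checked with simple arithmetic: a frame must be processed within one sensor period. Taking the total average inference time of 374.66 ms quoted earlier as the per-frame time and the 0.1 s LiDAR period, the helper below reproduces the 2.67 FPS figure and a required speed-up of about 3.75x, which the text rounds to 4x. The helper names are hypothetical.

```python
def realtime_budget(frame_time_ms, sensor_period_ms=100.0):
    """Return (frames per second, speed-up needed to fit into one sensor period)."""
    fps = 1000.0 / frame_time_ms
    speedup_needed = frame_time_ms / sensor_period_ms
    return fps, speedup_needed


def is_realtime(frame_time_ms, sensor_period_ms=100.0):
    """Real time: all processing takes at most one LiDAR scan period."""
    return frame_time_ms <= sensor_period_ms
```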
Intel Core Processor, Tiger Lake, CPU, iGPU, OpenVINO, PointPillars, 3D Point Cloud, Lidar, Artificial Intelligence, Deep Learning, Intelligent Transportation. The function is monotonically rising with decreasing slope. The backbone consists of sequential 2D convolutional layers that learn features from the transformed input at different scales. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. Available: https://github.com/open-mmlab, [7] Intel, "OpenVINO MO user guide," [Online]. FPGA '17, ACM. Then, we need to give the IE information about the input blob and load the network to the device. an analysis of inference acceleration options in the FINN tool and a proof that our PointPillars implementation cannot be accelerated further using FINN alone. This anomaly may be caused by communication overheads, which become increasingly substantial as the frame rate increases. Object detection in point clouds is an important aspect of many robotics applications such as autonomous driving. In this mode, the main thread runs on the CPU, which handles the pre-processing, scattering and post-processing. Because of the rather specific data format, object detection and recognition based on a LiDAR point cloud differs significantly from methods known from standard vision systems. The OpenPCDet framework supports several models for object detection in 3D point clouds (e.g., point clouds generated by LiDAR), including PointPillars. https://github.com/Xilinx/brevitas/. In this article, we have presented a hardware-software implementation of a car detection system based on LiDAR point clouds. Balanced Mode** - Refer to Section "Balanced Mode"

The PC is used only for visualisation purposes. 4 is simply the number of features per point. As the target clock rate increases, the HLS synthesis tool has to increase the maximum logic throughput. PointPillars: Fast Encoders for Object Detection from Point Clouds. Brief: there are two main directions for object detection in LiDAR data. Therefore, we need to focus on the optimization of these models.

In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR)(pp. load_network() takes pretty long time (usually 3~4s in iGPU) for each frame, as it needs the dynamic OpenCL compiling processing. Learn more atwww.Intel.com/PerformanceIndex. Before calling infer(), we need to reshape the input parameters for each frame of the point cloud, and call load_network() if the input shape is changed. https://docs.openvinotoolkit.org/2021.3/openvino_docs_install_guides_installing_openvino_linux.html, https://github.com/SmallMunich/nutonomy_pointpillars#onnx-ir-generate, http://www.cvlibs.net/datasets/kitti/eval_object.php?obj_benchmark=3d, https://docs.openvinotoolkit.org/latest/pot_README.html, https://docs.openvinotoolkit.org/latest/index.html, https://ark.intel.com/content/www/us/en/ark/products/208082/intel-core-i7-1185gre-processor-12m-cache-up-to-4-40-ghz.html, https://ark.intel.com/content/www/us/en/ark/products/208662/intel-core-i7-1165g7-processor-12m-cache-up-to-4-70-ghz.html, https://docs.openvinotoolkit.org/latest/openvino_docs_MO_DG_Deep_Learning_Model_Optimizer_DevGuide.html, https://github.com/SmallMunich/nutonomy_pointpillars, https://docs.openvinotoolkit.org/latest/openvino_docs_IE_DG_Integrate_with_customer_application_new_API.html, Half-Precision Floating-Point Format (16 bits), Single-Precision Floating-Point Format (32 bits), Tera (Trillion) Floating-point Operations Per Second. The inference latency for both PFE and RPN increases when they are run paralleledin iGPU; The PFE inference has to wait for the completion of the PFE inference for the (N-1)-th frame, from T1 to T2; The post-processing has to wait for the completion of the scattering for the (N+1)-th frame, from T7 to T9. The main idea in integration, is to replace the forward() function of PyTorch* with the infer() function (Python* API) of OpenVINO toolkit. Learn more. At present, no operations can be moved to the PL as almost whole CLB resources are consumed. 
You can notice a similar idea of converting a lidar point cloud to a pseudo image in PIXOR too. The final, reduced version of the PointPillars network has a 3D AP drop of 14% in Easy, 19% in Moderate, 15% in Hard and a BEV AP drop of 1% in Easy, 8% in Moderate, 7% in Hard regarding the original network version without quantisation. the KITTI ranking [9]). Web browsers do not support MATLAB commands. IE offers a unified API across a number of supported Intel architecture processors. The sample codes for the quantization by the POT API are the following: Performance varies by use, configuration and other factors. The last issue is the major reason that prevents this technology from being used more widely in commercially available vehicles (e.g., to improve ADAS solutions). https://github.com/nutonomy/second.pytorch. Do you work for Intel? Extensive experimentation shows that PointPillars outperforms previous methods with respect to both speed and accuracy by a large margin [1]. Finally, the FINN framework usually implements amajority of the network in hardware, but it also keeps some unsynthesisable operations in the ONNX graph (Open Neural Network Exchange), next to the FPGA implementation. Generally, two approaches can be distinguished: classical and based on deep neural networks. Chipnet: Real-time lidar processing for drivable region segmentation on an FPGA. Eigen v3. CUDA source codes are removed from the compiling configuration file. This modification reduced the network to an extent that enabled it to fit onto the ZCU 104 platform. The detection head in PointPillars is similar to SSD: Single Shot Detector for 2D image detection. An overview of the PointPillars network structure [10]. A tag already exists with the provided branch name. The inference time should be reduced 4x to reach real-time, as aLiDAR sensor sends a new point cloud every 0.1 seconds. We use the KITTI 3D object detection dataset [12] to evaluate the accuracy of the NN models. PointNet [4]. 
In comparison with the Latency Mode, the main idea of the Throughput Mode is to maximize the parallelization of the PFE and RPN inferences on the iGPU to achieve maximal throughput. In this mode, the main thread runs on the CPU, which handles the pre-processing, scattering and post-processing. The Vitis AI tool is based on the DPU accelerator. The function is monotonically increasing with a decreasing slope. The backbone consists of sequential 2D convolutional layers that learn features from the transformed input at different scales. Next, the Inference Engine must be given the input blob information, and the network is loaded to the device. The contributions include an analysis of inference acceleration options in the FINN tool and a proof that our PointPillars implementation cannot be accelerated further using FINN alone. This anomaly may be caused by communication overheads, which become increasingly substantial as the frame rate increases. Object detection in point clouds is an important aspect of many robotics applications, such as autonomous driving. Because of the rather specific data format, object detection and recognition based on a LiDAR point cloud differ significantly from methods known from standard vision systems. The OpenPCDet framework (https://github.com/open-mmlab) supports several models for object detection in 3D point clouds (e.g., point clouds generated by lidar), including PointPillars. Brevitas is available at https://github.com/Xilinx/brevitas/.

Keywords: Intel Core Processor, Tiger Lake, CPU, iGPU, OpenVINO, PointPillars, 3D Point Cloud, Lidar, Artificial Intelligence, Deep Learning, Intelligent Transportation.

[7] Intel, "OpenVINO MO user guide," [Online].
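The Throughput Mode idea can be illustrated with a toy pipeline. The main thread does the CPU-side work, while inference runs on a single worker thread that stands in for the iGPU. Pre-processing of frame N then overlaps inference of frame N-1, and post-processing of frame N-1 overlaps inference of frame N. This is a simplified, hypothetical sketch built on Python's `concurrent.futures`. The stage functions are placeholders, not the article's PFE/RPN code, and real overlap in Python assumes the inference runtime releases the GIL.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Any, Callable, List, Optional

Stage = Callable[[Any], Any]


def run_pipelined(frames: List[Any], preprocess: Stage, infer: Stage,
                  postprocess: Stage) -> List[Any]:
    """Overlap CPU stages with inference running on one worker thread."""
    results: List[Any] = []
    pending: Optional[Future] = None
    with ThreadPoolExecutor(max_workers=1) as accelerator:  # stands in for the iGPU
        for frame in frames:
            prepared = preprocess(frame)  # CPU: overlaps inference of frame N-1
            previous = pending
            pending = accelerator.submit(infer, prepared)  # queue inference of frame N
            if previous is not None:
                # CPU: finish frame N-1 while frame N is being inferred.
                results.append(postprocess(previous.result()))
        if pending is not None:
            results.append(postprocess(pending.result()))  # drain the last frame
    return results


def run_sequential(frames: List[Any], preprocess: Stage, infer: Stage,
                   postprocess: Stage) -> List[Any]:
    """Reference implementation: one frame at a time, no overlap."""
    return [postprocess(infer(preprocess(f))) for f in frames]


if __name__ == "__main__":
    pre = lambda f: f + 1   # placeholder for pre-processing
    inf = lambda x: x * 10  # placeholder for PFE/RPN inference
    post = lambda y: y - 1  # placeholder for post-processing
    frames = list(range(5))
    print(run_pipelined(frames, pre, inf, post))
    print(run_sequential(frames, pre, inf, post))
```

Both functions return results in frame order. The pipelined version only changes *when* each stage runs, which is why it can be checked against the sequential reference.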
In this article, we have presented a hardware-software implementation of a car detection system based on LiDAR point clouds.

**Balanced Mode** - Refer to Section "Balanced Mode".

The PC is used only for visualisation purposes. The value 4 is simply the number of features per point. As the target clock rate increases, the HLS synthesis tool has to increase the maximum logic throughput. "PointPillars: Fast Encoders for Object Detection from Point Clouds" was published in 2019. There are two main directions for object detection in lidar data. Therefore, we need to focus on the optimization of these models. The use of 2D convolutions substantially reduces the computational complexity of the PointPillars network while maintaining the detection accuracy (PointPillars can be considered as VoxelNet without 3D convolutions; for average precision, see Table 1). Thus, we decided to start research on its acceleration. Recently (December 2020), Xilinx released a real-time PointPillars implementation using the Vitis AI framework [20]. CUDA kernels in the original source code need to be replaced by standard C++. Then, after upsampling, batch normalisation and a ReLU activation are applied.
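The sketch below makes the pillar encoding concrete. It groups 4-feature points (x, y, z, reflectance) into x-y grid cells and decorates each point into the 9-dimensional vector D = [x, y, z, r, xc, yc, zc, xp, yp] used by PointPillars. Here xc, yc and zc are offsets from the arithmetic mean of the pillar's points, and xp and yp are offsets from the pillar's x-y centre. It is a pure-Python illustration under assumed parameters: the grid origin, the 0.16 m cell size and the caps of 12000 pillars and 100 points per pillar are hypothetical defaults. Overflowing points are simply dropped, whereas the PointPillars paper describes random sampling.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float, float, float]  # x, y, z, reflectance
Cell = Tuple[int, int]                     # pillar index in the x-y grid


def build_pillars(points: List[Point], x_min: float = 0.0, y_min: float = -40.0,
                  cell: float = 0.16, max_pillars: int = 12000,
                  max_points: int = 100) -> Dict[Cell, List[List[float]]]:
    """Group points into x-y pillars and decorate each point to 9 features.

    All defaults are illustrative assumptions. Points that would open a pillar
    beyond max_pillars, or exceed max_points in a pillar, are dropped.
    """
    groups: Dict[Cell, List[Point]] = {}
    for p in points:
        key = (math.floor((p[0] - x_min) / cell), math.floor((p[1] - y_min) / cell))
        bucket = groups.get(key)
        if bucket is None:
            if len(groups) >= max_pillars or max_points < 1:
                continue  # pillar budget exhausted (or no room for any point)
            bucket = groups[key] = []
        if len(bucket) < max_points:
            bucket.append(p)

    pillars: Dict[Cell, List[List[float]]] = {}
    for (ix, iy), pts in groups.items():
        n = len(pts)  # always >= 1: buckets are only created when a point is added
        mx = sum(p[0] for p in pts) / n  # arithmetic mean of the pillar's points
        my = sum(p[1] for p in pts) / n
        mz = sum(p[2] for p in pts) / n
        cx = x_min + (ix + 0.5) * cell   # x-y centre of the pillar
        cy = y_min + (iy + 0.5) * cell
        pillars[(ix, iy)] = [
            [x, y, z, r, x - mx, y - my, z - mz, x - cx, y - cy]
            for x, y, z, r in pts
        ]
    return pillars


if __name__ == "__main__":
    cloud = [(0.2, 0.2, 1.0, 0.5), (0.8, 0.6, 3.0, 0.1), (2.5, 0.5, 0.0, 0.0)]
    for key, feats in build_pillars(cloud, x_min=0.0, y_min=0.0, cell=1.0).items():
        print(key, len(feats), "point(s) with", len(feats[0]), "features each")
```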

For the vehicle category, the accuracy of PointPillars is roughly equivalent to that of ContFuse, but its inference speed reaches about 60 Hz, far higher than that of other networks. We have used the Brevitas tool for quantisation of the PointPillars neural network and the FINN framework for synthesis and FPGA implementation of the quantised network. In the DPU version that was used to run PointPillars on the ZCU 104 platform, the accelerator can perform 2048 multiply-add operations per cycle and operates at a frequency of 325 MHz (650 MHz is applied for the DSPs). As for hardware, the models can run on any NVIDIA GPU, including NVIDIA Jetson devices. The current design utilises more PL resources than the original conference version [16] because of the decreased folding and the changed clock rate. It is also the basic unit of the PointPillars (PP) feature vector. We leverage the open-source project SmallMunich [9] to convert the NN models from PyTorch* to ONNX, following the instructions in https://github.com/SmallMunich/nutonomy_pointpillars#onnx-ir-generate.

Yang Wang, Xu, Qing
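As a back-of-the-envelope check of the DPU figures quoted above, 2048 multiply-add operations per cycle at 325 MHz give a theoretical peak of 2048 × 325·10^6 ≈ 6.66·10^11 MAC/s. That is about 1.33 TOPS if each multiply-add is counted as two operations, a common convention assumed here. The function name is illustrative. The result is only an upper bound, since sustained throughput on PointPillars is limited by memory traffic and layer shapes.

```python
def peak_ops_per_second(macs_per_cycle: int, clock_hz: float,
                        ops_per_mac: int = 2) -> float:
    """Theoretical peak: every MAC unit busy on every clock cycle.

    ops_per_mac=2 counts one multiply and one add per MAC (assumed convention).
    """
    return macs_per_cycle * clock_hz * ops_per_mac


if __name__ == "__main__":
    peak = peak_ops_per_second(2048, 325e6)
    print(f"{peak / 1e12:.2f} TOPS")
```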

It turned out that the most important step is the quantisation of the Backbone part, which accounts for 99% of the size of the input network. The power consumption reported by Vivado is 6.515 W.

The network begins with a feature encoder, a simplified PointNet, that converts the point cloud into a sparse pseudo-image. In the source code, the maximum number of pillars is set to 12000, which means that at most 12000 pillars are expected as the network input. The number of points is reduced to around 20K per frame by create_reduced_point_cloud(). The processing is split into 4 threads, each handling a portion of the voxels. In the original PointPillars code, only the NMS algorithm is implemented as a CUDA kernel. The computation consists mainly of multiply-add operations.

FINN processes quantised DCNNs trained with Brevitas and deploys them on FPGAs; in [17] we used FINN version 0.3. The generated HDL code is complicated and difficult to analyse. Several optimisations were applied, such as rewriting the application from Python to C++. The only option left for a significant increase in implementation speed is further architecture reduction. The frame rate of the PointPillars implementation in FINN is 3.82 Hz. Average inference time: 374.66 milliseconds (2.67 fps).

Commonly used lidar databases include KITTI and the Waymo Open Dataset. ONNX provides an open-source format for AI models, covering both deep learning and traditional ML.

[11] Intel, "IE integration guide," [Online]. Available: https://docs.openvinotoolkit.org/latest/openvino_docs_IE_DG_Integrate_with_customer_application_new_API.html

[12] KITTI, "KITTI 3D detection dataset," [Online].
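In the original PointPillars code, only the NMS (non-maximum suppression) algorithm is implemented as a CUDA kernel, and it has to be ported to standard C++ for CPU-only targets. The sketch below shows how small a CPU version can be. It implements greedy NMS over axis-aligned bird's-eye-view boxes in plain Python. It is an illustration, not the port used in the article. It deliberately ignores box rotation, even though PointPillars predicts rotated boxes, which need an exact rotated IoU. The 0.5 IoU threshold is an assumed example value.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # x1, y1, x2, y2 in BEV (axis-aligned)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], thresh: float = 0.5) -> List[int]:
    """Greedy NMS: keep boxes in descending score order unless they overlap
    an already-kept box by more than `thresh` IoU. Returns kept indices."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= thresh for k in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    dets = [(0.0, 0.0, 2.0, 2.0), (0.1, 0.0, 2.1, 2.0), (5.0, 5.0, 6.0, 6.0)]
    print(nms(dets, [0.9, 0.8, 0.7]))
```

Checking each candidate against the kept list gives the same result as the usual "suppress everything overlapping the current best" formulation. It is O(n·k) in the number of kept boxes, which is fine for the few hundred candidates typically left after score thresholding.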
